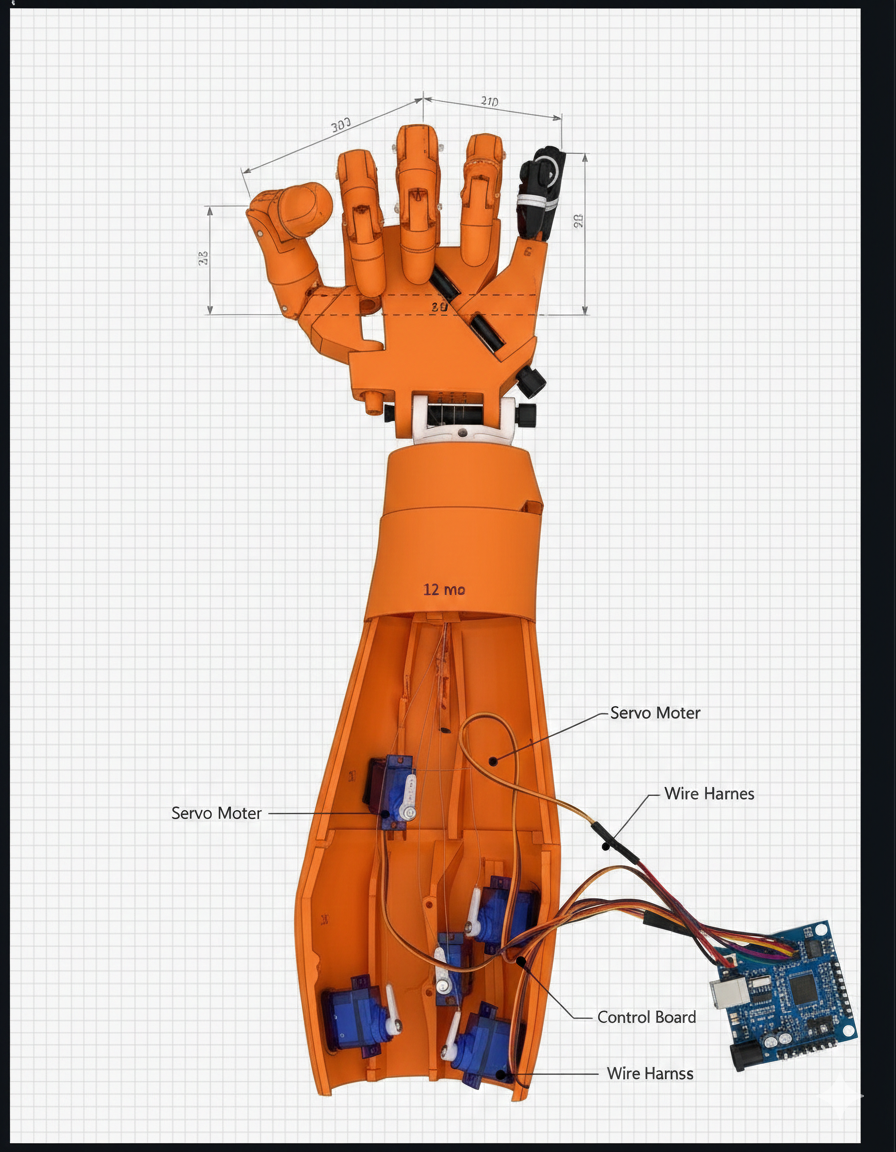

AI Vision-Controlled Robotic Hand

Real-time computer vision system integrated with robotic hardware for gesture-based control. Combines deep learning, embedded systems, and sensor fusion to bridge human intent with machine execution.

Exploring the frontiers of machine learning, computer vision, and generative models. Bridging theory with practical application.

I am an AI Engineering Master's student focused on building intelligent systems that connect machine perception with real user experiences.

My work combines computer vision, sensor data processing, and applied machine learning with mobile development to turn AI ideas into practical applications. From vision-controlled robotics to AI-powered fitness apps, I enjoy transforming complex technology into usable products.

I am especially interested in creating AI-driven mobile experiences that bridge real-world data and everyday interaction.

Cross-platform mobile fitness application with AI-driven workout recommendations. Combines Flutter development, Firebase backend, and Python-based analytics for personalized training insights.

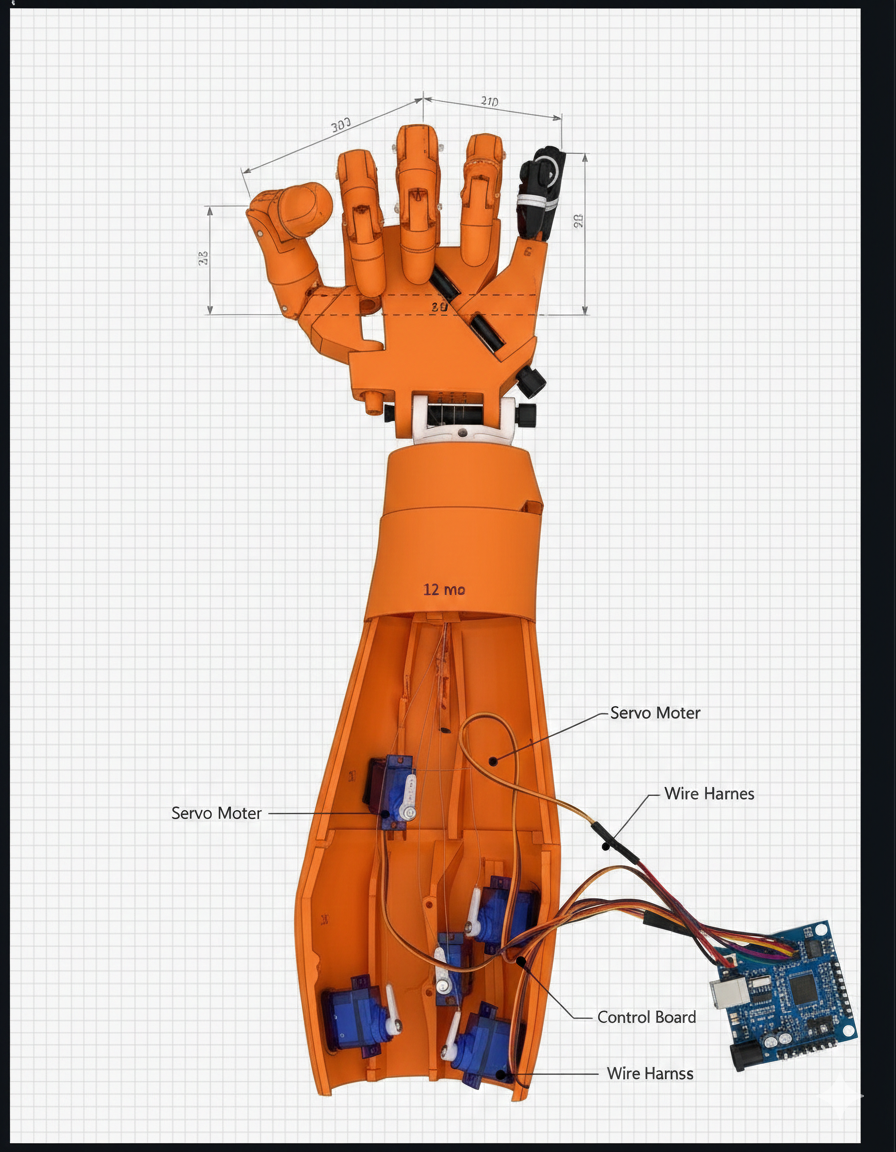

Real-time environment perception and mapping system using LiDAR sensors. Developed during an industry internship, it combines sensor processing, SLAM algorithms, and Gazebo simulation for robot navigation.

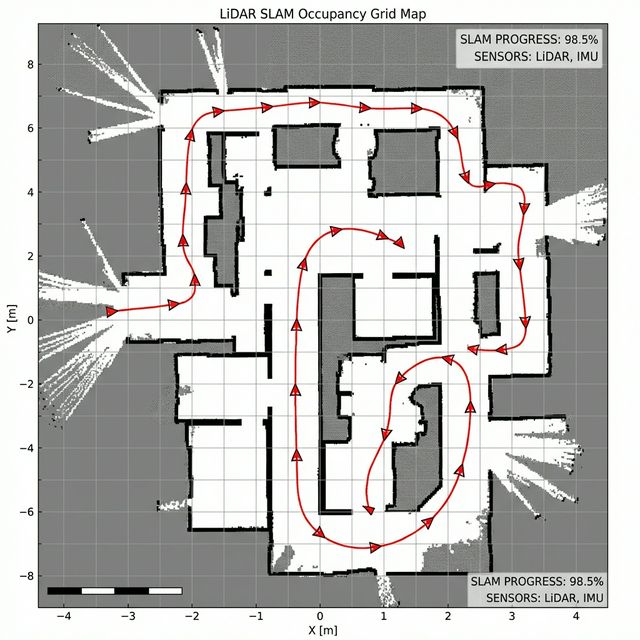

Industrial IoT data engineering system for real-time smart water meter monitoring. Developed during an internship at Baylan Water & Energy Meters, it features payload decoding, a multi-interface architecture, and event-driven data processing.

Currently working on exciting new AI research projects.

I'm always interested in discussing AI research, collaboration opportunities, or innovative projects. Feel free to reach out!